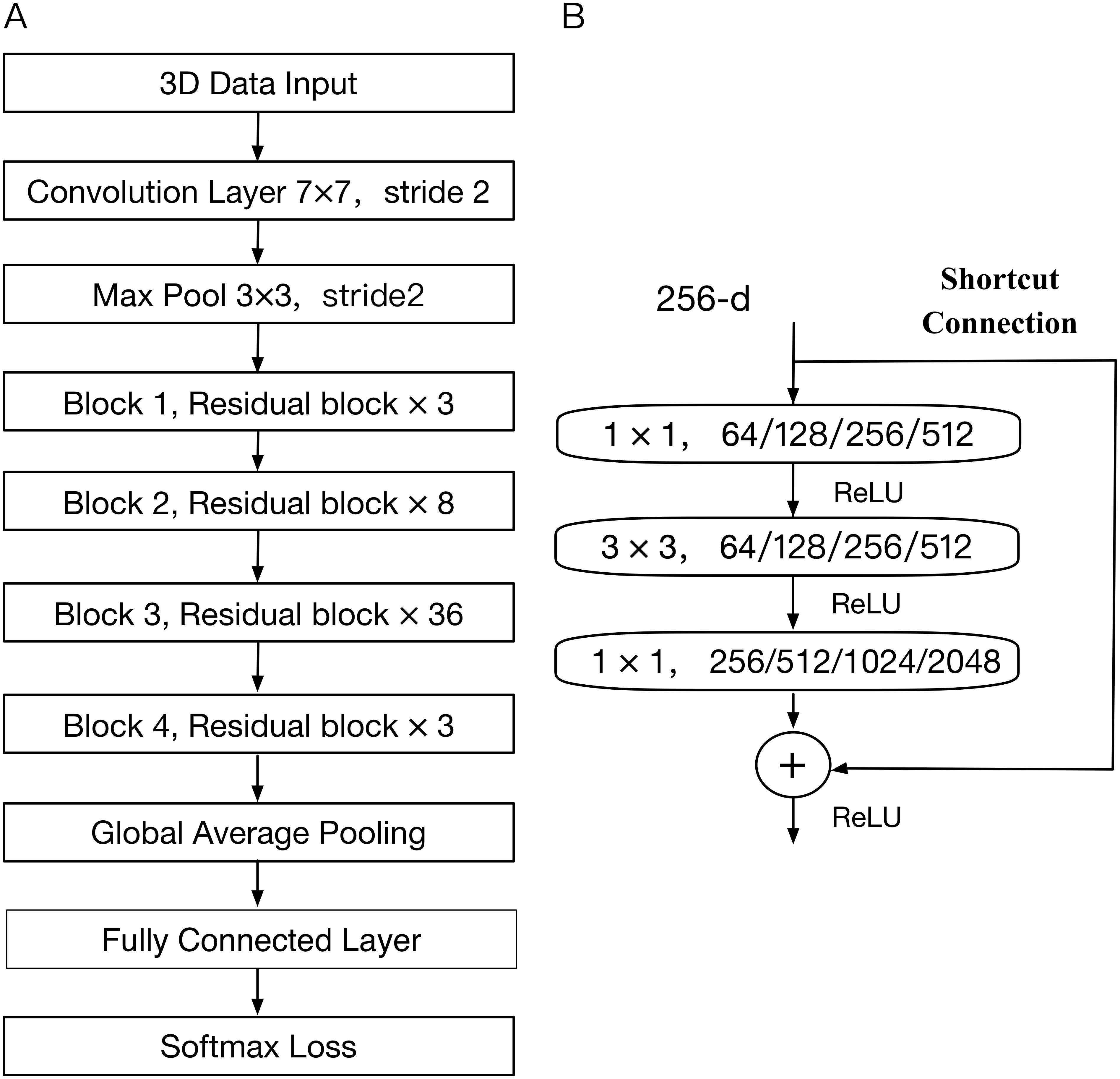

Image-to-image translation is the task of translating one possible representation of a scene into another, given training data from the two sets of scenes. In this guide, we focus on WNet_cGAN, which blends spectral and height information in one network. The model was developed to refine or extract a level of detail 2 (LoD2) Digital Surface Model (DSM) for buildings from a previously available DSM using an additional raster, such as panchromatic (PAN) imagery. This approach can help extract refined building structures with a higher level of detail from a raw DSM and high-resolution imagery of urban areas.

Before following the guide below, we recommend reading the guide How Pix2Pix works?. WNet_cGAN is a type of conditional GAN (Generative Adversarial Network). cGANs (conditional GANs) are trained on paired sets of images or scenes from two domains so that they can translate from one domain to the other. WNet_cGAN was first introduced in a paper and was originally used to refine stereo DSMs using panchromatic imagery in order to generate LoD2 building DSMs. Further improvements to the model were made in another paper, by changing the generator architecture and introducing a normal vector loss in addition to the previous losses.

The architecture of WNet_cGAN consists of a Generator (G) and a Discriminator (D). The generator admits two inputs and is stacked with the discriminator, a PatchGAN-type architecture that discriminates between real and fake labels. As shown in figure 1, G consists of two encoders, E1 and E2, concatenated at the top layer, and a common decoder. The decoder integrates information from the two modalities and generates a refined DSM or, in the case of the dataset used in the paper, an LoD2-like DSM with refined building shapes. G is built on a U-form network, so the decoder receives skip connections from both encoder streams, 14 in total.
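The two-encoder, one-decoder layout of the generator can be made more concrete with a small shape-bookkeeping sketch. It does not build a real network; it only traces feature-map shapes through two encoders, their concatenation at the deepest layer, and a shared decoder that receives a skip connection from each encoder at every level. The 8-stage depth, the channel widths, and the 256-pixel input are assumptions borrowed from the usual Pix2Pix U-Net, not values stated in this guide; they were chosen because 7 skip levels from each of 2 encoders give the 14 skip connections mentioned here. The names `FeatureMap`, `encode`, and `wnet_generator_shapes` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureMap:
    channels: int
    size: int  # side length of the (square) feature map


def encode(size, widths):
    """Trace one encoder: each stage halves the spatial size."""
    maps = []
    for width in widths:
        size //= 2
        maps.append(FeatureMap(width, size))
    return maps


def wnet_generator_shapes(size=256,
                          widths=(64, 128, 256, 512, 512, 512, 512, 512)):
    """Trace shapes through a W-shaped generator (assumed widths and depth)."""
    e1 = encode(size, widths)  # encoder E1, e.g. the raw DSM stream
    e2 = encode(size, widths)  # encoder E2, e.g. the PAN image stream

    # The two encoders are concatenated at the deepest ("top") layer.
    bottleneck = FeatureMap(e1[-1].channels + e2[-1].channels, e1[-1].size)

    x = bottleneck
    decoder = []
    skip_connections = 0
    # Walk back up; every level below the bottleneck has one skip per encoder.
    for level in range(len(widths) - 2, -1, -1):
        up = FeatureMap(widths[level], x.size * 2)  # upsample by 2
        s1, s2 = e1[level], e2[level]
        assert up.size == s1.size == s2.size, "skip sizes must match"
        x = FeatureMap(up.channels + s1.channels + s2.channels, up.size)
        skip_connections += 2
        decoder.append(x)

    # Final upsampling back to input resolution, one output band (the DSM).
    output = FeatureMap(1, x.size * 2)
    return {
        "bottleneck": bottleneck,
        "decoder": decoder,
        "output": output,
        "skip_connections": skip_connections,
    }


if __name__ == "__main__":
    shapes = wnet_generator_shapes()
    print("bottleneck:", shapes["bottleneck"])
    print("skip connections:", shapes["skip_connections"])
    print("output:", shapes["output"])
```

Concatenating both skips at each decoder level is one common way to fuse two streams; the actual fusion used by WNet_cGAN may differ in detail.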
